Notes on Neural Networks


Neural networks imitate the cognitive function of the brain in an attempt to build intelligent machines [1]. The neuron, shown in the figure below, is the fundamental building block of the human brain. It is a specialized cell that processes and transmits information electrochemically. Each neuron receives nerve impulses from preceding, connected neurons through connections called dendrites. Each incoming signal is amplified or attenuated according to the receiving neuron's learned sensitivity to the particular neuron that sent it. Within the cell body, the adjusted input signals are aggregated and an output signal is computed. The output signal is transmitted along the axon, which in turn connects to multiple successive neurons.

The human brain's ability to encode knowledge arises from signals sent within a complex network of roughly 100 billion neurons, each connected to several thousand other neurons [2]. Each neuron's ability to alter its sensitivity to its various inputs contributes a small part of the overall network's computational capacity.

Artificial neural networks originated from attempts to mimic the learning ability of the human brain [3]. This section introduces artificial neural networks. The first subsection outlines the perceptron and the multilayer perceptron (MLP) neural network model. The second subsection introduces the back propagation algorithm, a parameter optimization method used to train neural network classifiers. The third subsection introduces the concept of overfitting, along with techniques that can be used to prevent it. The last subsection lists the advantages and disadvantages of MLP neural network classifiers.

Perceptrons and multilayer perceptrons

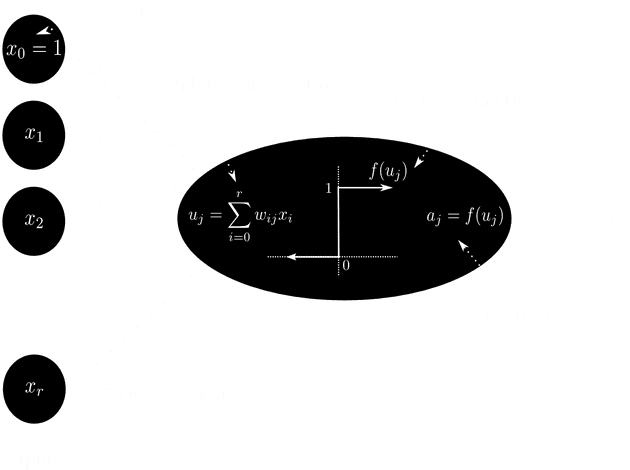

In the same manner as the human brain, an artificial neural network is composed of nodes connected by directed links [4]. The net input $z_j$ to a node $j$ is computed by combining the input signals $x_1, \ldots, x_n$ in a weighted sum and adding a node bias $b_j$. That is,

$$z_j = \sum_{i=1}^{n} w_{ij} x_i + b_j,$$

where $w_{ij}$ is the weight of the connection from input node $i$ to node $j$ and $b_j$ is the bias. By introducing an extra dummy input signal $x_0$ equal to one, the combination function can be written as [5]

$$z_j = \sum_{i=0}^{n} w_{ij} x_i,$$

with $w_{0j} = b_j$.

The node output $y_j$ is $1$ if the net input is greater than or equal to $0$; otherwise the node output is $0$. This hard limit function, or step function, can be written as

$$y_j = \begin{cases} 1 & \text{if } z_j \ge 0, \\ 0 & \text{otherwise.} \end{cases}$$

Such a node, called a perceptron, is depicted in the figure below.

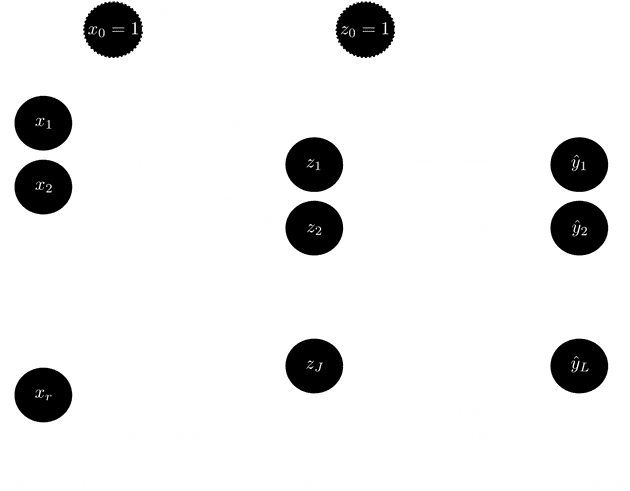
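The perceptron computation can be sketched in plain Python. This is a minimal illustration, not code from the source: the names `net_input`, `net_input_dummy`, `step` and `perceptron` are hypothetical, the step function is assumed to output 1 or 0, and the AND-gate weights are chosen by hand purely for demonstration.

```python
import math
from typing import Sequence


def net_input(x: Sequence[float], w: Sequence[float], b: float) -> float:
    """Weighted sum of the inputs plus the node bias: sum_i w_i * x_i + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def net_input_dummy(x: Sequence[float], w_with_bias: Sequence[float]) -> float:
    """Same sum, using a dummy input x_0 = 1 whose weight w_with_bias[0] is the bias."""
    x_full = [1.0, *x]
    return sum(wi * xi for wi, xi in zip(w_with_bias, x_full))


def step(z: float) -> int:
    """Hard limit (step) function: 1 if z >= 0, else 0."""
    return 1 if z >= 0 else 0


def perceptron(x: Sequence[float], w: Sequence[float], b: float) -> int:
    """A perceptron: the step function applied to the node's net input."""
    return step(net_input(x, w, b))


# Hand-picked (hypothetical) weights that make the perceptron compute logical AND.
w_and, b_and = [1.0, 1.0], -1.5
for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    # Folding the bias into a dummy input leaves the net input unchanged.
    assert math.isclose(net_input(x, w_and, b_and), net_input_dummy(x, [b_and, *w_and]))
    print(x, perceptron(x, w_and, b_and))
```

Only the input (1, 1) pushes the net input (0.5) to zero or above, so it is the only input that produces an output of 1.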

A feed-forward neural network is composed of multiple perceptrons interconnected so that the output of one perceptron can be the input to another [5]. The connections in a feed-forward network only allow signals to pass in one direction. The multilayer perceptron (MLP) neural network is the most widely studied and used feed-forward neural network model [3]. It is structured as three or more distinct layers: an input layer, one or more hidden layers, and an output layer. Each layer consists of a set of nodes, and nodes in adjacent layers are fully connected in a feed-forward manner: all nodes in the input layer are connected to all nodes in the first hidden layer, all nodes in the first hidden layer are connected to all nodes in the second hidden layer, and so forth, until all nodes in the last hidden layer are connected to all nodes in the output layer. The neural network maintains a vector of connection weights used to adjust the input signal as it propagates through the network towards the output layer. The structure of a three-layer MLP neural network is shown in the figure below.
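To make the size of that weight vector concrete, the following hypothetical helper counts the weights of a fully connected feed-forward network from its layer sizes. It assumes that every layer except the output layer also emits a bias signal fixed at one, so for layer sizes $n_0, n_1, \ldots, n_L$ the count is $\sum_{l=0}^{L-1} (n_l + 1)\, n_{l+1}$.

```python
from typing import Sequence


def count_weights(layer_sizes: Sequence[int]) -> int:
    """Number of connection weights in a fully connected feed-forward network.

    layer_sizes lists the node counts from the input layer to the output layer,
    excluding bias signals. Each node in a layer receives one weight from every
    node in the preceding layer plus one from that layer's bias signal.
    """
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Three-layer example: 4 inputs, 3 hidden nodes, 2 outputs -> (4+1)*3 + (3+1)*2 = 23.
print(count_weights([4, 3, 2]))
```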

This MLP neural network receives input signals at the input layer nodes. The input vector $\mathbf{x} = (x_0, x_1, \ldots, x_n)$ represents the input features $x_1$ to $x_n$ and the bias signal $x_0$, which is set equal to one. The input signal is propagated forward to the hidden layer nodes, each of which functions like a perceptron. The net input to hidden node $j$ is computed as the weighted sum of the inputs received from all preceding nodes. That is,

$$z_j = \sum_{i=0}^{n} w_{ij} x_i, \quad j = 1, \ldots, m,$$

where $w_{ij}$ is the weight of the connection between input node $i$ and hidden node $j$, and $m$ is the number of hidden nodes.

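The output $h_j$ of hidden node $j$ is computed by passing the weighted sum of its inputs through an activation function $g$, so that $h_j = g(z_j)$. This activation function can be any monotonically increasing function, such as the symmetric sigmoid function [6],

$$g(z) = \frac{2}{1 + e^{-z}} - 1,$$

which maps any net input into the interval $(-1, 1)$.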
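A short sketch of the symmetric sigmoid, assuming the form $g(z) = 2/(1 + e^{-z}) - 1$ (some texts apply the name to $\tanh(z)$ instead). Algebraically this equals $\tanh(z/2)$, which the sketch uses because evaluating $e^{-z}$ directly overflows for large negative $z$. The function name is hypothetical.

```python
import math


def symmetric_sigmoid(z: float) -> float:
    """Symmetric sigmoid g(z) = 2 / (1 + e^(-z)) - 1, with range (-1, 1).

    Computed as tanh(z / 2), which is algebraically identical and does not
    overflow for large negative z the way exp(-z) would.
    """
    return math.tanh(z / 2.0)


# Check the identity against the direct formula on moderate inputs.
for z in (-5.0, -1.0, 0.0, 0.5, 3.0):
    direct = 2.0 / (1.0 + math.exp(-z)) - 1.0
    assert math.isclose(symmetric_sigmoid(z), direct, abs_tol=1e-12)

# Monotonically increasing on a grid, and symmetric about the origin: g(-z) = -g(z).
zs = [i / 10 for i in range(-50, 51)]
gs = [symmetric_sigmoid(z) for z in zs]
assert all(a < b for a, b in zip(gs, gs[1:]))
assert all(math.isclose(symmetric_sigmoid(-z), -symmetric_sigmoid(z), abs_tol=1e-15) for z in zs)
print(symmetric_sigmoid(-2.0), symmetric_sigmoid(2.0))
```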

The output of the hidden layer is the vector $\mathbf{h} = (h_0, h_1, \ldots, h_m)$, with the bias signal $h_0$ set to one. The vector $\mathbf{h}$ supplies the inputs to the output layer, which has $K$ nodes.

Like the nodes in the hidden layer, each output layer node functions like a perceptron. Input signals from the hidden layer nodes are combined in the weighted sum

$$s_k = \sum_{j=0}^{m} v_{jk} h_j, \quad k = 1, \ldots, K,$$

where $v_{jk}$ is the weight of the connection from hidden node $j$ to output layer node $k$. The output layer produces the vector $\mathbf{y} = (y_1, \ldots, y_K)$. Each output node $k$ produces its output $y_k = f(s_k)$ by passing the weighted sum of its inputs through a monotonically increasing activation function $f$. For example, a logistic activation function can be used,

$$f(s) = \frac{1}{1 + e^{-s}}.$$
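The logistic function can be evaluated stably by branching on the sign of its argument, so that `math.exp` is never called on a large positive value. The sketch below (function name hypothetical) also checks that rescaling the logistic output from $(0, 1)$ to $(-1, 1)$, via $2f(s) - 1$, reproduces the symmetric sigmoid, assuming that activation has the form $2/(1 + e^{-s}) - 1 = \tanh(s/2)$.

```python
import math


def logistic(s: float) -> float:
    """Logistic activation f(s) = 1 / (1 + e^(-s)), with range (0, 1).

    The two branches avoid calling exp on a large positive argument,
    which would raise OverflowError for very negative s.
    """
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


# Rescaling the logistic output from (0, 1) to (-1, 1) gives the
# symmetric sigmoid: 2 f(s) - 1 = tanh(s / 2).
for s in (-800.0, -3.0, 0.0, 2.0, 800.0):
    assert math.isclose(2.0 * logistic(s) - 1.0, math.tanh(s / 2.0), abs_tol=1e-12)

print(logistic(0.0))  # 0.5
```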

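The entire MLP model can be represented as the following non-linear function,

$$y_k(\mathbf{x}; \mathbf{w}) = f\left(v_{0k} + \sum_{j=1}^{m} v_{jk}\, g\left(\sum_{i=0}^{n} w_{ij} x_i\right)\right),$$

for output $k = 1, \ldots, K$, where $f$ and $g$ are the activation functions in the output layer and hidden layer respectively, and $\mathbf{w}$ is the vector of all connection weights, namely the input-to-hidden weights $w_{ij}$ and the hidden-to-output weights $v_{jk}$, where $v_{0k}$ multiplies the hidden bias signal $h_0 = 1$ [4].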
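The complete forward pass can be sketched directly from this formula in plain Python. The sketch assumes the symmetric sigmoid $g(z) = \tanh(z/2)$ in the hidden layer and the logistic $f$ in the output layer; the function name `mlp_forward`, the nested-list weight layout and the example weights are hypothetical, chosen only for illustration. No training is involved: the weights are fixed by hand.

```python
import math
from typing import List, Sequence


def logistic(s: float) -> float:
    """Output-layer activation f(s) = 1 / (1 + e^(-s)), computed stably."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def symmetric_sigmoid(z: float) -> float:
    """Hidden-layer activation g(z) = 2 / (1 + e^(-z)) - 1, i.e. tanh(z / 2)."""
    return math.tanh(z / 2.0)


def mlp_forward(x: Sequence[float], W: Sequence[Sequence[float]],
                V: Sequence[Sequence[float]]) -> List[float]:
    """Forward pass of a three-layer MLP.

    x: input features x_1..x_n; the bias signal x_0 = 1 is prepended here.
    W: (n + 1) rows by m columns; W[i][j] links input node i to hidden node j + 1.
    V: (m + 1) rows by K columns; V[j][k] links hidden node j (row 0 is the
       bias signal h_0 = 1) to output node k + 1.
    """
    x_full = [1.0, *x]
    if len(W) != len(x_full) or len(V) != len(W[0]) + 1:
        raise ValueError("weight shapes do not match the input and hidden layer sizes")
    m, K = len(W[0]), len(V[0])
    # Hidden layer: z_j = sum_i w_ij x_i, then h_j = g(z_j); h_0 = 1 is the bias.
    h = [1.0] + [symmetric_sigmoid(sum(row[j] * xi for row, xi in zip(W, x_full)))
                 for j in range(m)]
    # Output layer: s_k = sum_j v_jk h_j, then y_k = f(s_k).
    return [logistic(sum(row[k] * hj for row, hj in zip(V, h))) for k in range(K)]


# Hypothetical weights: n = 2 inputs, m = 2 hidden nodes, K = 1 output node.
W_example = [[0.1, -0.2],   # bias x_0 -> hidden nodes
             [0.4, 0.3],    # x_1 -> hidden nodes
             [-0.5, 0.2]]   # x_2 -> hidden nodes
V_example = [[0.05],        # bias h_0 -> output node
             [1.0],         # h_1 -> output node
             [-1.0]]        # h_2 -> output node
y = mlp_forward([0.5, -1.0], W_example, V_example)
print(y)
```

Storing the bias weights as row 0 of each weight table mirrors the dummy-input convention $x_0 = h_0 = 1$, so the bias needs no separate code path.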

Each connection weight adjusts the signal passing along that connection as it propagates towards the output layer. Because the network maintains a separate weight for each connection, a signal originating from one node can be very important in generating the output of one node and unimportant in generating the output of another. This connection-specific weighting of signals is what gives a neural network its predictive power. It has been shown that a three-layer MLP neural network with enough hidden layer nodes can approximate any continuous function on a compact domain to any desired degree of accuracy [7].
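The approximation result can be illustrated, though not proved, with a hand-built network. The sketch below is a simple staircase construction, not the argument of Hornik et al.: it assumes a single input on $[0, 1]$, logistic hidden nodes with hand-set weights, and a linear (identity) output node instead of the logistic output used above. Each hidden node switches on between two neighbouring grid points, and its output weight equals the target function's increment there, so the maximum error shrinks as hidden nodes are added. The target $\sin(2\pi x)$ and all function names are hypothetical.

```python
import math
from typing import Callable


def logistic(z: float) -> float:
    """Numerically stable logistic function 1 / (1 + e^(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def staircase_network(fn: Callable[[float], float], m: int, steepness: float = 20.0):
    """Hand-built one-hidden-layer network approximating fn on [0, 1].

    Hidden node i (i = 1..m) has input weight k and bias -k * c_i, so it switches
    from 0 to 1 around c_i, the midpoint between grid points (i-1)/m and i/m.
    Its output weight is the jump fn(i/m) - fn((i-1)/m); the output bias is fn(0).
    The output node is linear (identity activation).
    """
    grid = [i / m for i in range(m + 1)]
    k = steepness * m  # steeper hidden nodes for finer grids
    centers = [(grid[i - 1] + grid[i]) / 2 for i in range(1, m + 1)]
    jumps = [fn(grid[i]) - fn(grid[i - 1]) for i in range(1, m + 1)]
    out_bias = fn(grid[0])

    def network(x: float) -> float:
        hidden = [logistic(k * (x - c)) for c in centers]
        return out_bias + sum(v * h for v, h in zip(jumps, hidden))

    return network


def max_error(fn, approx, n_points: int = 1001) -> float:
    """Largest absolute error on an evenly spaced grid over [0, 1]."""
    xs = [i / (n_points - 1) for i in range(n_points)]
    return max(abs(fn(x) - approx(x)) for x in xs)


def target(x: float) -> float:
    return math.sin(2 * math.pi * x)


errors = {m: max_error(target, staircase_network(target, m)) for m in (5, 20, 50)}
for m, err in errors.items():
    print(f"{m:3d} hidden nodes: max error {err:.4f}")
```

The steepness factor grows with the number of hidden nodes so that each transition stays narrow relative to the grid spacing.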

The process by which the optimal weight vector is determined, that is, the way the network "learns", is commonly referred to as network training. The back propagation algorithm is a popular technique for network training. A general understanding of this algorithm is important because of its significance in the neural network literature and because it is used in the empirical study of this dissertation. The back propagation algorithm is outlined in the next subsection.

Notes

- Published as part of Wilgenbus, E.F., 2013. The file fragment classification problem: a combined neural network and linear programming discriminant model approach. Master's thesis, North-West University.

Footnotes and references

1. Ramlall, I., 2010. Artificial intelligence: Neural networks simplified. International Research Journal of Finance and Economics (39), 105-120.
2. Olson, D. L., Shi, Y., 2006. Introduction to Business Data Mining. Irwin-McGraw-Hill Series: Operations and Decision Sciences. McGraw-Hill.
3. Zhang, G. P., 2010. Neural Networks for Data Mining. In: Data Mining and Knowledge Discovery Handbook, 2nd Edition. Springer Science and Business Media, Ch. 12, pp. 419-444.
4. Russell, S., Norvig, P., 2010. Artificial Intelligence: A Modern Approach, 3rd Edition. Pearson.
5. Bishop, C. M., 1995. Neural Networks for Pattern Recognition. Oxford University Press.
6. Steeb, W. H., 2005. The Nonlinear Workbook: Chaos, Fractals, Cellular Automata, Neural Networks, Genetic Algorithms, Gene Expression Programming, Support Vector Machine, Wavelets, Hidden Markov Models, Fuzzy Logic with C++, Java and Symbolic++ Programs, 3rd Edition. World Scientific.
7. Hornik, K., Stinchcombe, M., White, H., 1989. Multilayer feedforward networks are universal approximators. Neural Networks 2 (5), 359-366.